The GPU Memory Hierarchy: L2, L1, and Registers

September 19, 2025

While High Bandwidth Memory (HBM) provides GPUs with massive throughput, it is still relatively far away from the compute units in terms of latency. To bridge that gap, modern GPUs employ a layered memory hierarchy: L2 cache, L1 cache (shared memory), and registers. Each layer trades capacity for speed, ensuring that the most frequently used data stays as close to the compute units as possible.

Why this Memory Hierarchy Matters

Training and inference involve repeatedly accessing weights, activations, and intermediate results. Fetching all of this directly from HBM would overwhelm its bandwidth and introduce unacceptable delays. By staging data through progressively faster and smaller memories, GPUs reduce both latency and energy consumption, keeping tensor cores and CUDA cores consistently fed.
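To make the latency argument concrete, the standard average-memory-access-time (AMAT) formula for a three-level hierarchy can be evaluated in a few lines of Python. This is a minimal sketch. The function name `amat` and every latency and hit-rate value below are hypothetical illustrations, not measured A100 or H100 figures. The model also ignores the latency hiding GPUs get from keeping many warps in flight.

```python
def amat(l1_latency, l1_hit_rate, l2_latency, l2_hit_rate, hbm_latency):
    """Average memory access time for an L1 -> L2 -> HBM hierarchy.

    Simplified serial model: every access pays the L1 lookup, L1 misses also
    pay the L2 lookup, and L2 misses additionally pay the HBM access.
    The result is in the same units as the latency arguments (cycles here).
    """
    l1_miss_rate = 1.0 - l1_hit_rate
    l2_miss_rate = 1.0 - l2_hit_rate
    return l1_latency + l1_miss_rate * (l2_latency + l2_miss_rate * hbm_latency)


# Hypothetical latencies in cycles, chosen only to show the shape of the formula.
L1_CYCLES, L2_CYCLES, HBM_CYCLES = 30, 200, 500

print(f"{amat(L1_CYCLES, 0.9, L2_CYCLES, 0.8, HBM_CYCLES):.1f}")  # high reuse: 60.0
print(f"{amat(L1_CYCLES, 0.5, L2_CYCLES, 0.5, HBM_CYCLES):.1f}")  # poor reuse: 255.0
```

Even with made-up numbers, the effect described above is visible: the more requests the upper levels absorb, the further the average cost falls below that of a raw HBM access.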

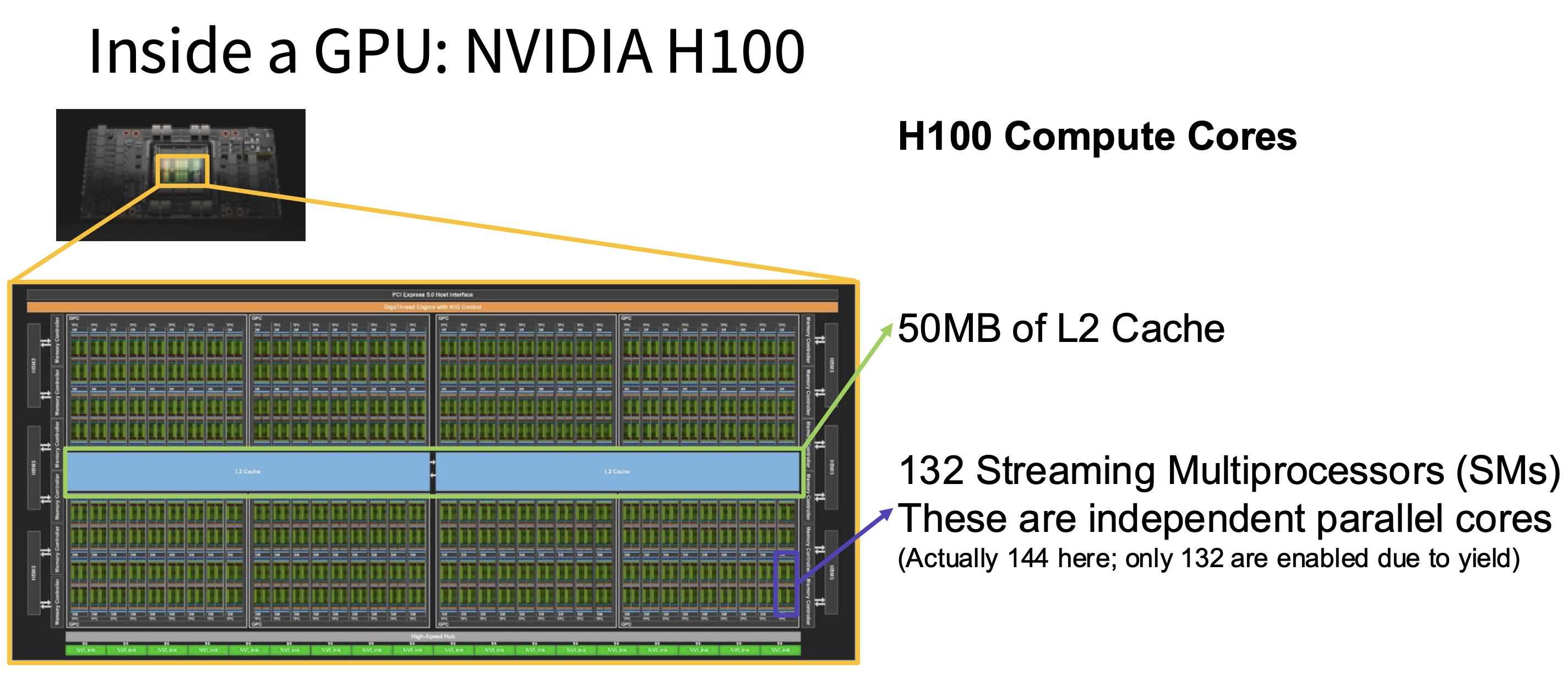

Figure 1: Inside the NVIDIA H100 GPU. We see 50 MB of L2 cache as well as 132 streaming multiprocessors (SMs) stacked next to each other.

L2 Cache - Global Buffer for All Streaming Multiprocessors (SMs)

- Location and Scope: The L2 cache sits on the GPU die but outside the SMs, as shown in Figure 1. It is shared across the entire GPU, so every SM can access it.

- Size: Typically tens of megabytes. For example, NVIDIA A100 has 40 MB of L2, H100 has 50 MB (figure 1).

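- Access Latency: Lower than HBM, but several times higher than L1. On recent NVIDIA GPUs, an L2 hit costs on the order of a couple hundred clock cycles, while an HBM access costs several hundred.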

Function:

- Stores recently accessed data from HBM.

- Enables reuse across SMs: if multiple SMs need the same weights, one fetch from HBM is enough.

- Helps balance traffic: reduces direct bandwidth pressure on HBM.

Impact on ML:

- Large batch sizes or shared weights across layers benefit because data can stay in L2 across multiple SMs.

- A larger L2 improves throughput for workloads with high reuse across SMs.
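The reuse argument can be illustrated with a toy model: a single least-recently-used (LRU) cache shared by all SMs, with capacity counted in weight tiles rather than bytes. This sketch rests on deliberately simple assumptions. Real L2 caches are set-associative and partitioned, SMs run concurrently rather than one after another, and the class and function names here are hypothetical. Within those limits, it shows both bullets above. When the shared working set fits, each tile is fetched from HBM once, however many SMs read it. When it does not fit, a cyclic sweep makes LRU thrash and every read goes to HBM.

```python
from collections import OrderedDict


class SharedL2:
    """Toy LRU cache shared by every SM; capacity is measured in tiles, not bytes."""

    def __init__(self, capacity_tiles):
        self.capacity_tiles = capacity_tiles
        self.resident = OrderedDict()  # tile_id -> None, least recently used first
        self.hbm_fetches = 0

    def read(self, tile_id):
        if tile_id in self.resident:
            self.resident.move_to_end(tile_id)  # hit: mark as most recently used
            return
        self.hbm_fetches += 1  # miss: the tile must come from HBM
        self.resident[tile_id] = None
        if len(self.resident) > self.capacity_tiles:
            self.resident.popitem(last=False)  # evict the least recently used tile


def hbm_fetches(num_sms, weight_tiles, capacity_tiles):
    """Each SM sweeps the same weight tiles in order; SMs are modeled one after another."""
    l2 = SharedL2(capacity_tiles)
    for _sm in range(num_sms):
        for tile in range(weight_tiles):
            l2.read(tile)
    return l2.hbm_fetches


print(hbm_fetches(num_sms=4, weight_tiles=8, capacity_tiles=8))  # fits: 8 fetches, one per tile
print(hbm_fetches(num_sms=4, weight_tiles=8, capacity_tiles=4))  # too small: 32 fetches, no reuse
```

Without any shared cache, the same access pattern would cost 4 × 8 = 32 HBM fetches, which is exactly what the undersized cache degrades to.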

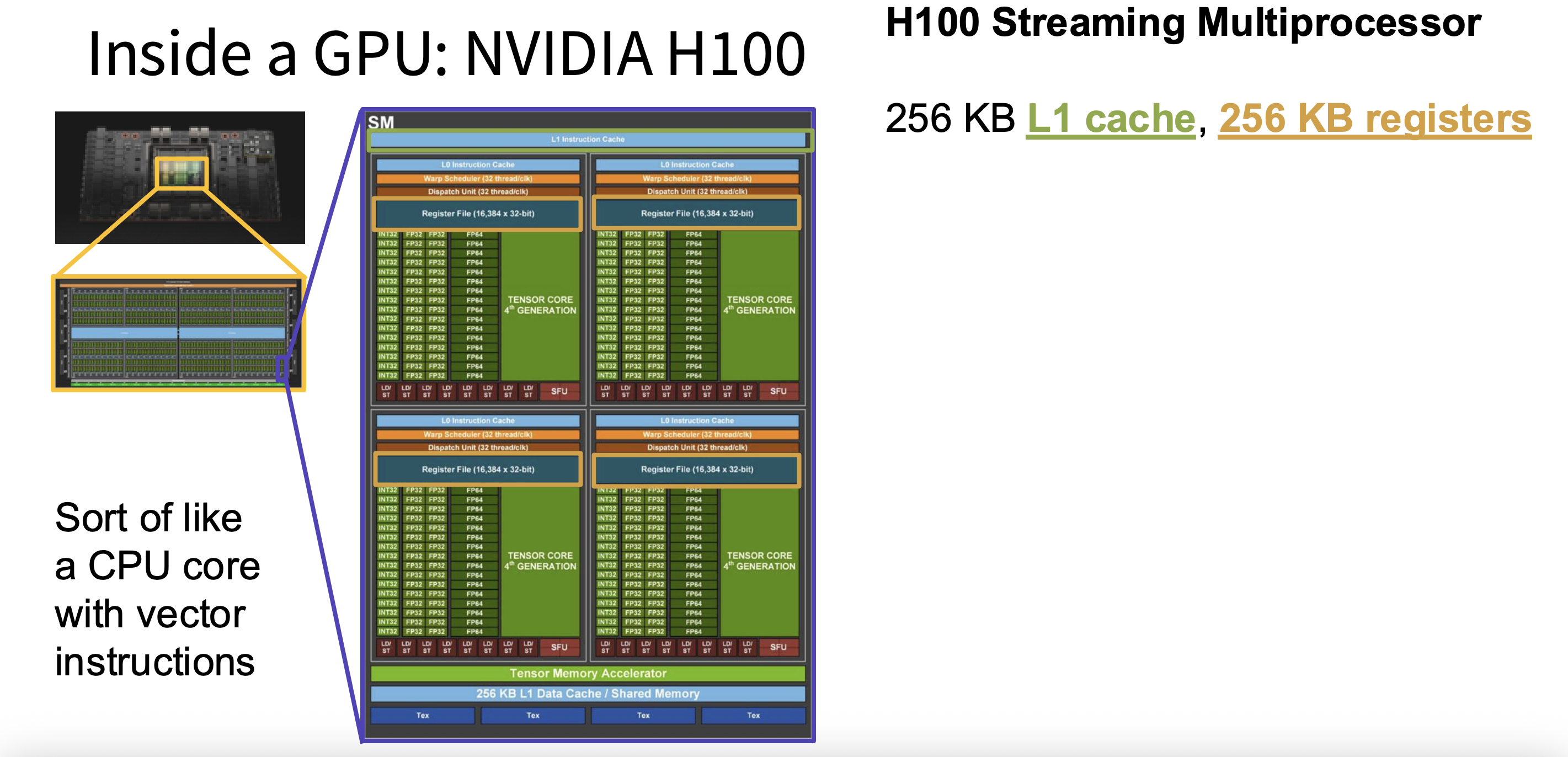

Figure 2: Inside a streaming multiprocessor (SM) of the H100. The L1 instruction cache shown at the top is separate from the L1 data cache/shared memory discussed in this section.

L1 Cache (Shared Memory) - Local Fast Storage in Each SM

- Location and scope: Inside each SM (figure 2), private to that SM.

- Size: A combined L1/shared-memory array of 192 KB per SM on A100 and 256 KB per SM on H100. The split between cache and shared memory is configurable in CUDA.

- Access latency: Much lower than L2, typically a few tens of clock cycles.

Dual role:

- As a cache: Automatically stores frequently used data loaded from L2 or HBM.

- As shared memory: Explicitly managed by the programmer in CUDA, allowing threads in a block to share data directly.

Why it's critical:

- Reduces redundant global memory loads by letting threads reuse values locally.

- Essential for tiled algorithms (e.g., matrix multiplication): input sub-tiles are stored once in shared memory, then reused by many threads.

Impact on ML:

- CNN kernels and GEMMs rely heavily on shared memory for efficient data reuse.

- Without it, every thread would repeatedly fetch the same values from L2/HBM, wasting bandwidth.
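The tiling idea can be checked with a small pure-Python model that counts global-memory (L2/HBM) reads. This is a sketch, not a CUDA kernel. Each `(bi, bj)` iteration plays the role of one thread block, the `As`/`Bs` lists stand in for shared memory, and the function names are hypothetical. A naive GEMM reads 2·M·N·K elements from global memory. Staging T×T tiles cuts that by a factor of T, because each element copied into shared memory is then reused by T threads.

```python
def matmul_naive(A, B):
    """Reference GEMM: every output element fetches a full row of A and column of B."""
    M, K, N = len(A), len(A[0]), len(B[0])
    C = [[0.0] * N for _ in range(M)]
    reads = 0
    for i in range(M):
        for j in range(N):
            acc = 0.0
            for k in range(K):
                acc += A[i][k] * B[k][j]
                reads += 2  # one element of A and one of B from global memory
            C[i][j] = acc
    return C, reads


def matmul_tiled(A, B, T):
    """Shared-memory tiling: each block loads T x T tiles once, then all its threads reuse them."""
    M, K, N = len(A), len(A[0]), len(B[0])
    assert M % T == 0 and N % T == 0 and K % T == 0, "toy model assumes divisible sizes"
    C = [[0.0] * N for _ in range(M)]
    reads = 0
    for bi in range(0, M, T):  # one "thread block" per T x T output tile
        for bj in range(0, N, T):
            acc = [[0.0] * T for _ in range(T)]  # one accumulator per thread
            for bk in range(0, K, T):
                # Cooperative load: each tile element is read from global memory once.
                As = [[A[bi + r][bk + c] for c in range(T)] for r in range(T)]
                Bs = [[B[bk + r][bj + c] for c in range(T)] for r in range(T)]
                reads += 2 * T * T
                # A real kernel would call __syncthreads() here before using the tiles.
                for r in range(T):
                    for c in range(T):
                        for k in range(T):
                            acc[r][c] += As[r][k] * Bs[k][c]
            for r in range(T):
                for c in range(T):
                    C[bi + r][bj + c] = acc[r][c]
    return C, reads


M = N = K = 8
A = [[float((i + k) % 5) for k in range(K)] for i in range(M)]
B = [[float((k * 3 + j) % 7) for j in range(N)] for k in range(K)]

C_ref, naive_reads = matmul_naive(A, B)
C_tiled, tiled_reads = matmul_tiled(A, B, T=4)
print(C_ref == C_tiled)          # True: same result
print(naive_reads, tiled_reads)  # 1024 256: a 4x reduction for T = 4
```

Both versions sum over k in the same order, so the results match exactly. Only the global-memory traffic changes.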

Registers — Per-Thread Working Memory

- Location and scope: Located directly inside each SM, tied to CUDA cores and tensor cores. Each thread has its own registers, invisible to other threads.

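- Size: Huge in aggregate (each A100 SM has a 256 KB register file, about 27 MB across its 108 SMs), but limited per thread (at most 255 32-bit registers per thread).

- Access Latency: Effectively none beyond the instruction pipeline itself. Operands are read straight from the register file as instructions execute, making registers the fastest storage on the GPU.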

Function:

- Hold operands for the current instruction (e.g., matrix elements in an MMA).

- Store loop counters, addresses, and intermediate sums.

- All arithmetic instructions operate on values in registers.

Constraints:

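- If a thread needs more registers than are available, values "spill" to local memory. Local memory resides in device memory and is cached in L1/L2, so spilled values are much slower to access than registers.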

- Register usage affects occupancy: more registers per thread → fewer threads can be active on an SM.

Impact on ML:

- Efficient use of registers ensures tensor cores are continuously fed without stalling.

- Kernel optimization often involves balancing register use against thread occupancy for maximum throughput.
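The register/occupancy trade-off comes down to simple arithmetic. This sketch assumes two figures that hold for both A100 and H100: 65,536 32-bit registers per SM and at most 2,048 resident threads per SM. It deliberately ignores the other limits a real occupancy calculation includes, such as register allocation granularity, shared-memory usage, and per-SM block limits. The function name is hypothetical.

```python
REGISTERS_PER_SM = 65536    # 32-bit registers per SM (A100 and H100)
MAX_THREADS_PER_SM = 2048   # resident-thread cap per SM (A100 and H100)
WARP_SIZE = 32


def max_resident_threads(regs_per_thread):
    """Threads per SM allowed by register usage alone, rounded down to whole warps."""
    by_registers = REGISTERS_PER_SM // regs_per_thread
    by_registers -= by_registers % WARP_SIZE  # registers are allocated per warp
    return min(by_registers, MAX_THREADS_PER_SM)


for regs in (32, 64, 128, 255):
    threads = max_resident_threads(regs)
    occupancy = threads / MAX_THREADS_PER_SM
    print(f"{regs:>3} regs/thread -> {threads:>4} threads/SM ({occupancy:.1%} occupancy)")
```

Going from 32 to 64 registers per thread halves the number of threads that can be resident on an SM. Whether that costs throughput depends on whether the extra registers remove enough memory traffic to compensate.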

How They Work Together in Large-Scale Training

Imagine training a transformer with billions of parameters. Here’s how the data moves:

Step 1: Load from HBM

- Model weights, activations, and optimizer states are stored in HBM.

- Running kernels issue global-memory loads for the chunks they need.

Step 2: Stage in L2 Cache

- The fetched weights are stored in L2, making them available to all SMs.

- If another SM needs the same weights, it can pull from L2 instead of HBM.

Step 3: Move into L1/Shared Memory

- Within an SM, warps of threads copy sub-tiles of the matrices into L1 (as shared memory).

- Multiple threads reuse this data to perform tiled matrix multiplications efficiently.

Step 4: Registers for Execution

- Each thread loads its slice of the data into registers.

- The tensor cores or CUDA cores consume the register values to perform multiply-accumulate operations.

- Intermediate results also live in registers until they’re written back.

Step 5: Write Back

- Results move back out through the hierarchy: registers → shared memory (often used to stage the output tile) → L2 → HBM.
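Steps 3 to 5 can be modeled the same way for a single output tile. In this sketch, each hypothetical "thread" keeps an r×r block of accumulators in registers (a local list). For every k step, it loads r values of A and r values of B from the shared-memory tile and updates all r² accumulators with an outer product. At the end, it stores each result exactly once. This is a CUDA-core-style simplification, and all function names are illustrative. Tensor-core MMA instructions work on warp-wide fragments with a different layout.

```python
def thread_microtile(As, Bs, row0, col0, r):
    """Step 4: one thread computes an r x r block of the output tile from shared-memory tiles.

    As is T x TK and Bs is TK x T (the shared-memory tiles from Step 3).
    Returns the register accumulators plus counts of shared loads and multiply-accumulates.
    """
    acc = [[0.0] * r for _ in range(r)]  # partial sums stay in registers for the whole k loop
    shared_loads = 0
    macs = 0
    for k in range(len(Bs)):
        a_frag = [As[row0 + i][k] for i in range(r)]  # r values of A copied into registers
        b_frag = [Bs[k][col0 + j] for j in range(r)]  # r values of B copied into registers
        shared_loads += 2 * r
        for i in range(r):
            for j in range(r):
                acc[i][j] += a_frag[i] * b_frag[j]  # outer-product update on register values
                macs += 1
    return acc, shared_loads, macs


def write_back(C, acc, row0, col0):
    """Step 5: store each finished accumulator to the output exactly once."""
    stores = 0
    for i, row in enumerate(acc):
        for j, value in enumerate(row):
            C[row0 + i][col0 + j] = value
            stores += 1
    return stores


def run_tile(As, Bs, r):
    """Split a T x T output tile among (T // r)**2 threads and total up the traffic."""
    T = len(As)
    C = [[0.0] * T for _ in range(T)]
    loads = macs = stores = 0
    for row0 in range(0, T, r):
        for col0 in range(0, T, r):
            acc, n_loads, n_macs = thread_microtile(As, Bs, row0, col0, r)
            loads += n_loads
            macs += n_macs
            stores += write_back(C, acc, row0, col0)
    return C, loads, macs, stores


T, TK = 8, 8
As = [[float((i * 3 + k) % 7) for k in range(TK)] for i in range(T)]
Bs = [[float((k * 5 + j) % 7) for j in range(T)] for k in range(TK)]

for r in (1, 2, 4):
    C, loads, macs, stores = run_tile(As, Bs, r)
    print(r, loads / macs, stores)  # shared loads per MAC: 2.0, 1.0, 0.5; always 64 stores
```

Shared-memory loads per multiply-accumulate fall as 2/r as each thread holds more accumulators in registers. Each output element is still written back only once, which is why intermediate sums stay in registers until Step 5.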