Streaming Multiprocessors: Scheduling and Execution

September 20, 2025

Modern GPUs achieve their massive parallel performance through Streaming Multiprocessors (SMs). An SM is the fundamental compute block of the GPU, containing everything needed to execute thousands of threads in parallel: cores for arithmetic, fast local memory, registers, and schedulers. By replicating SMs across the GPU die, manufacturers scale performance from tens of gigaflops into the multi-petaflop range. For example, NVIDIA’s A100 GPU integrates 108 SMs, while the newer H100 contains 132 in its SXM form. Understanding the role of SMs is critical to understanding why GPUs excel at deep learning and other workloads that demand large-scale parallelism.

Why SMs Matter for Machine Learning

Streaming multiprocessors are central to GPU efficiency because they:

- Provide the execution environment for tens of thousands of threads.

- Contain both general-purpose CUDA cores and specialized tensor cores.

- Manage fast local storage through registers and shared memory.

- Include schedulers that allow thousands of threads to be interleaved, hiding memory latency.

Without SMs, the GPU’s cores would be isolated units. SMs bring them together into coordinated, highly parallel engines.

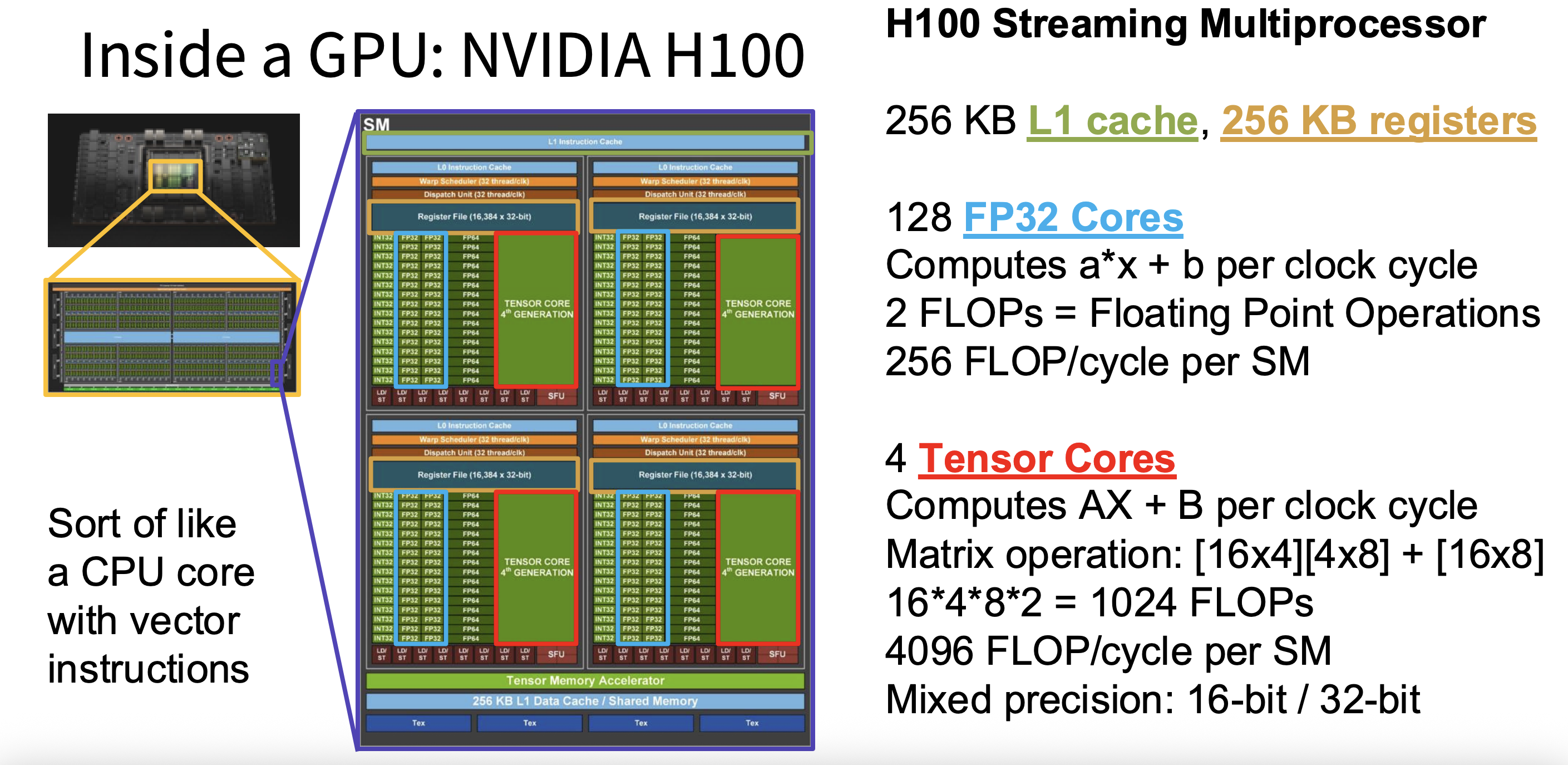

Figure 1: H100 SM floorplan highlighting each warp scheduler’s resources—256 KB L1/SMEM, 16k-register files, 128 FP32 ALUs, and four 4th-gen tensor cores delivering 4,096 mixed-precision FLOPs per cycle.
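To show how per-SM figures turn into a chip-level number, the sketch below multiplies the SM count by the caption's 4,096 FLOPs per cycle and by a clock frequency. It reads the caption's figure as a per-SM rate. The clock value is an assumption chosen for illustration, not a specification, and the function name `peak_tflops` is hypothetical.

```python
def peak_tflops(num_sms: int, flops_per_cycle_per_sm: int, clock_ghz: float) -> float:
    """Rough peak throughput in TFLOP/s: SMs x FLOPs/cycle/SM x cycles/second."""
    # clock_ghz * 1e9 cycles/s, divided by 1e12 FLOPs per TFLOP -> divide by 1000
    return num_sms * flops_per_cycle_per_sm * clock_ghz / 1000.0


SM_COUNT = 132              # H100 SM count quoted in the text
FLOPS_PER_CYCLE = 4096      # per-SM tensor-core rate from the figure caption
ASSUMED_CLOCK_GHZ = 1.8     # assumption: illustrative clock, not a vendor figure

estimate = peak_tflops(SM_COUNT, FLOPS_PER_CYCLE, ASSUMED_CLOCK_GHZ)
print(f"Estimated peak: {estimate:.1f} TFLOP/s")
```

This is an upper bound under the stated assumptions. Real kernels reach it only when every tensor core is fed on every cycle, which depends on the memory and scheduling behavior discussed in the rest of this article.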

Anatomy of an SM

Each SM integrates a variety of hardware resources that work together to execute threads efficiently:

- CUDA cores: General-purpose FP32, FP64, and integer units that perform scalar and vector arithmetic. These handle a wide range of tasks, from indexing and control flow to basic computations.

- Tensor cores: Specialized units designed for matrix multiply-accumulate operations (e.g., D = A × B + C). Tensor cores operate on small matrix tiles in reduced precision (FP16, BF16, FP8) with accumulation in FP32. This design enables hundreds of floating-point operations per cycle and provides the massive throughput required for modern neural network training.

- Registers: The fastest memory available, located inside the SM. Registers are private to each thread and store the immediate values being used in computations. All arithmetic operations are performed on register values.

- Shared memory / L1 cache: A small but fast memory region inside the SM, shared across all threads in a block. It can act as a cache or as programmer-managed shared memory in CUDA. This is especially important for tiled matrix multiplications, where multiple threads reuse the same data.

- Warp schedulers: Each SM has multiple schedulers that dispatch instructions from groups of 32 threads known as warps. These schedulers ensure that while one warp waits for data, another can execute, effectively hiding memory latency.

- Load/store units: Handle memory traffic between the SM and higher levels of the hierarchy (L2 cache and HBM).

Together, these resources form a self-contained compute cluster. The GPU die is then built by replicating SMs, enabling the scaling that makes GPUs effective for workloads ranging from graphics to deep learning.
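The tensor-core behavior described in the list above (reduced-precision inputs, FP32 accumulation) can be imitated in plain Python to see what the precision split means numerically. This is a minimal sketch, not a model of the actual hardware: the helper names are hypothetical, rounding is emulated with the standard `struct` module, and real tensor cores use their own tile shapes and accumulation order.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def tile_mma(a, b, c):
    """Compute D = A x B + C on one small tile.

    Inputs A and B are rounded to FP16; the running sum is kept in FP32.
    """
    m, k, n = len(a), len(b), len(b[0])
    d = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = to_fp32(c[i][j])                  # accumulator starts from C
            for p in range(k):
                prod = to_fp16(a[i][p]) * to_fp16(b[p][j])
                acc = to_fp32(acc + prod)           # accumulate in FP32
            d[i][j] = acc
    return d


a = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0, 2.0], [3.0, 4.0]]
c = [[0.5, 0.5], [0.5, 0.5]]
print(tile_mma(a, b, c))
print(to_fp16(0.1))  # shows the rounding applied to an FP16 input
```

Keeping the accumulator wider than the inputs limits the error that builds up as many small products are summed, which is the reason the inputs and the accumulator use different precisions.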

Parallel Execution in an SM

One of the defining features of an SM is its ability to manage massive numbers of threads. Threads are grouped into warps, and warps are organized into thread blocks. An SM can run multiple blocks at once, with each warp scheduler interleaving instructions across warps to keep the cores busy.
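As a small illustration of the thread, warp, and block hierarchy, the sketch below maps a linear thread index within a block to its warp and its position (lane) inside that warp, and counts how many warps a block occupies. It assumes a one-dimensional block and the 32-thread warp size mentioned earlier; the function names are hypothetical.

```python
WARP_SIZE = 32  # threads per warp, as described in the text


def warp_and_lane(thread_index: int) -> tuple:
    """Return (warp index within the block, lane within the warp)."""
    return divmod(thread_index, WARP_SIZE)


def warps_per_block(threads_per_block: int) -> int:
    """Number of warps a block occupies; a partial warp still takes a full slot."""
    return -(-threads_per_block // WARP_SIZE)  # ceiling division


print(warp_and_lane(33))      # thread 33 sits in warp 1, lane 1
print(warps_per_block(256))   # 8 warps
print(warps_per_block(100))   # 4 warps, the last one only partly filled
```

Block sizes that are not a multiple of 32 leave lanes idle in the last warp, which is one reason block sizes are usually chosen as multiples of the warp size.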

This design allows GPUs to hide latency. For example, when one warp is stalled waiting for data from memory, the scheduler can immediately switch to another warp that is ready to execute. The switch is lightweight because every resident warp keeps its state in the SM’s register file, so nothing has to be saved or restored, and it enables the SM to keep its CUDA and tensor cores operating near peak throughput.
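A toy simulation makes the latency-hiding argument concrete. In this sketch, every warp issues one instruction and then stalls for a fixed number of cycles, and a single scheduler issues from the first ready warp each cycle. The model and the name `simulate_utilization` are assumptions made for illustration; real schedulers and memory latencies are far more varied.

```python
def simulate_utilization(num_warps: int, mem_latency: int, cycles: int = 10_000) -> float:
    """Fraction of cycles in which the scheduler found a ready warp to issue."""
    ready_at = [0] * num_warps        # cycle at which each warp may issue again
    issued = 0
    for cycle in range(cycles):
        for w in range(num_warps):
            if ready_at[w] <= cycle:
                issued += 1
                # One cycle to issue, then the warp waits on memory.
                ready_at[w] = cycle + 1 + mem_latency
                break                  # at most one issue per cycle
    return issued / cycles


for warps in (1, 5, 10, 20):
    print(warps, simulate_utilization(warps, mem_latency=9))
```

With a 9-cycle stall, one warp keeps the scheduler busy 10% of the time, five warps 50%, and ten or more warps keep it fully busy. Beyond that point extra warps add no throughput in this model, which mirrors why more resident warps help only until the latency is covered.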

In a typical deep learning kernel:

- A tile of weights and activations is loaded from L2 or HBM into shared memory.

- Warps of threads copy elements into registers.

- Tensor cores perform multiply-accumulate operations on the register values.

- Intermediate results are stored back in registers or shared memory.

- Final results are written to L2 and eventually back to HBM.
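The five steps above can be sketched as a tiled matrix multiplication in plain Python. Ordinary lists stand in for each level of the memory hierarchy, so this shows only the data movement pattern, not GPU performance. The function name and the tile size are hypothetical choices for the example.

```python
def tiled_matmul(a, b, tile: int = 2):
    """Multiply a (m x k) by b (k x n), processing one tile at a time."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]            # output, standing in for HBM
    for i0 in range(0, m, tile):
        i1 = min(i0 + tile, m)
        for j0 in range(0, n, tile):
            j1 = min(j0 + tile, n)
            # Accumulator tile, standing in for per-thread registers.
            acc = [[0.0] * (j1 - j0) for _ in range(i1 - i0)]
            for p0 in range(0, k, tile):
                p1 = min(p0 + tile, k)
                # Step 1: stage input tiles in "shared memory".
                a_tile = [row[p0:p1] for row in a[i0:i1]]
                b_tile = [row[j0:j1] for row in b[p0:p1]]
                # Steps 2-4: read staged values, multiply-accumulate,
                # and keep the intermediate sums in the accumulator.
                for i in range(i1 - i0):
                    for j in range(j1 - j0):
                        for p in range(p1 - p0):
                            acc[i][j] += a_tile[i][p] * b_tile[p][j]
            # Step 5: write the finished tile back to the output.
            for i in range(i1 - i0):
                for j in range(j1 - j0):
                    c[i0 + i][j0 + j] = acc[i][j]
    return c


a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[1, 0], [0, 1], [2, 2]]
print(tiled_matmul(a, b))
```

Each staged tile is reused by every output element in the block it contributes to, so the number of reads from the slow level drops as the tile grows. That reuse is the point of staging data in shared memory.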

Real-World Implications for ML

- Scaling with SM count: More SMs mean more thread blocks can execute at the same time. At comparable clock speeds and per-SM throughput, this is why newer GPUs with higher SM counts can train larger models or process more data per unit time.

- Kernel design constraints: Register usage, shared memory allocation, and warp scheduling directly impact how many threads can be active on an SM (occupancy). Poor tuning can leave cores underutilized even if theoretical FLOP capacity is high.

- Efficiency through balance: The interaction of compute units and memory resources inside each SM determines whether a kernel is compute-bound or memory-bound. Optimizing this balance is a key part of GPU programming.
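The occupancy and balance points above can be turned into back-of-the-envelope arithmetic. The sketch below estimates occupancy from a kernel's resource usage and classifies a workload as compute-bound or memory-bound by comparing its FLOPs per byte with the machine's ratio of peak compute to peak bandwidth. All limits in `SMLimits` and the peak rates in the usage lines are illustrative assumptions, not vendor specifications, and the calculation ignores allocation granularity that real hardware applies.

```python
from dataclasses import dataclass
from math import ceil


@dataclass
class SMLimits:
    """Per-SM limits. Defaults are illustrative assumptions, not vendor specs."""
    max_threads: int = 2048
    max_blocks: int = 32
    registers: int = 64 * 1024
    shared_mem_bytes: int = 228 * 1024
    warp_size: int = 32


def occupancy(threads_per_block, regs_per_thread, smem_per_block, limits=SMLimits()):
    """Resident warps divided by the maximum warps an SM can hold."""
    warps_per_block = ceil(threads_per_block / limits.warp_size)
    threads_allocated = warps_per_block * limits.warp_size
    # Each resource caps the number of resident blocks; the tightest cap wins.
    caps = [limits.max_blocks, limits.max_threads // threads_allocated]
    if regs_per_thread > 0:
        caps.append(limits.registers // (regs_per_thread * threads_allocated))
    if smem_per_block > 0:
        caps.append(limits.shared_mem_bytes // smem_per_block)
    resident_warps = min(caps) * warps_per_block
    return resident_warps / (limits.max_threads // limits.warp_size)


def bound_kind(flops, bytes_moved, peak_flops_per_s, peak_bytes_per_s):
    """Compare arithmetic intensity (FLOPs/byte) with the machine balance."""
    intensity = flops / bytes_moved
    machine_balance = peak_flops_per_s / peak_bytes_per_s
    return "compute-bound" if intensity >= machine_balance else "memory-bound"


print(occupancy(256, 32, 0))    # registers allow all 64 warps
print(occupancy(256, 64, 0))    # doubling registers per thread halves occupancy

# Hypothetical peak rates: 1e15 FLOP/s and 3e12 bytes/s.
n = 4096
print(bound_kind(n, 12 * n, 1e15, 3e12))                # FP32 elementwise add
print(bound_kind(2 * n**3, 3 * n * n * 2, 1e15, 3e12))  # FP16 matmul, ideal traffic
```

Under these assumptions, the elementwise add is memory-bound because it performs one operation per 12 bytes moved, while the large matrix multiplication is compute-bound because each loaded value is reused many times.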