High Bandwidth Memory (HBM): Why GPUs Need It for Machine Learning

September 18, 2025

Introduction

High Bandwidth Memory (HBM) is the primary memory technology used in modern data center GPUs, including NVIDIA's A100 and H100 and AMD's MI250. Unlike traditional DDR or GDDR memory, which sits in separate chips on the circuit board or on memory modules, HBM consists of stacked DRAM dies placed right next to the GPU die in the same package and connected to it through a silicon interposer. This design delivers both high capacity and extremely high bandwidth, the key requirements for training today's large-scale machine learning models.

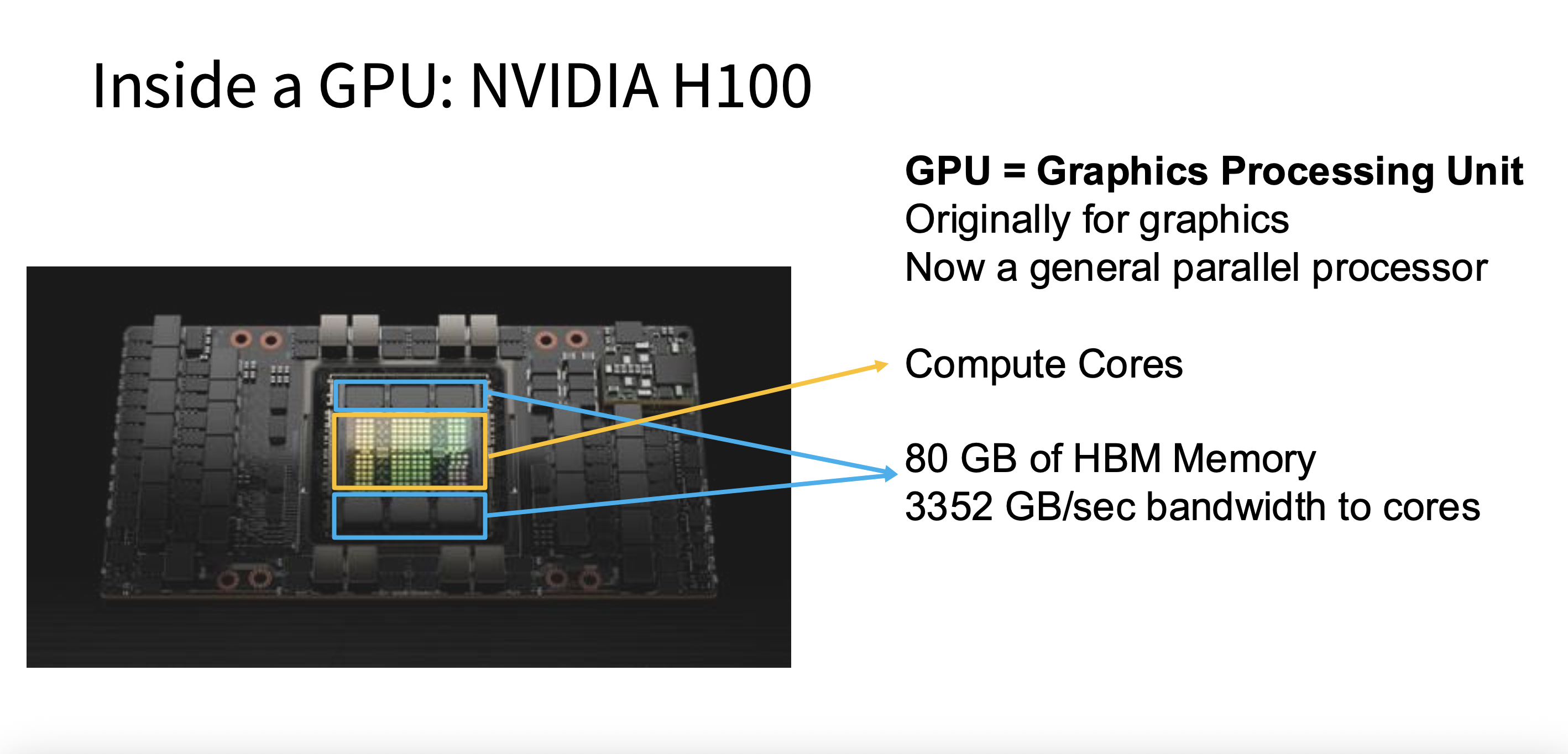

Figure 1: Components of the NVIDIA H100 GPU, including the GPU die, compute cores, and HBM.

Why HBM Matters for Machine Learning

Training deep neural networks involves moving massive amounts of data:

- Weights (parameters of the model)

- Activations (intermediate results during forward passes)

- Gradients (computed during backpropagation) and optimizer states (used to update the weights)
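To see how quickly these categories add up, here is a rough sketch of a memory estimate for mixed-precision training with the Adam optimizer. It assumes the commonly cited accounting of 16 bytes per parameter (2 bytes each for BF16 weights and gradients, plus 12 bytes for FP32 master weights and two FP32 Adam moment buffers) and deliberately ignores activations, which depend on batch size and sequence length. The function name and the model sizes are illustrative.

```python
# Rough memory estimate for mixed-precision training with Adam.
# Assumption: BF16 weights and gradients, plus FP32 master weights and
# two FP32 Adam moments. Activations are NOT included.

BYTES_PER_PARAM = {
    "weights_bf16": 2,
    "gradients_bf16": 2,
    "master_weights_fp32": 4,
    "adam_momentum_fp32": 4,
    "adam_variance_fp32": 4,
}


def training_state_gb(num_params: int) -> float:
    """Return GB (10**9 bytes) needed for weights, gradients and optimizer state."""
    total_bytes = num_params * sum(BYTES_PER_PARAM.values())
    return total_bytes / 1e9


if __name__ == "__main__":
    hbm_capacity_gb = 80  # H100 capacity quoted in this article
    for billions in (1, 3, 7):
        need = training_state_gb(billions * 10**9)
        fits = "fits" if need <= hbm_capacity_gb else "does not fit"
        print(f"{billions}B params: {need:.0f} GB of state ({fits} in {hbm_capacity_gb} GB)")
```

Under these assumptions a 7-billion-parameter model needs about 112 GB for its training state alone, more than a single 80 GB GPU holds, before any activations are stored.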

If the memory system cannot supply this data quickly enough, the GPU's compute units stall, leaving much of the available compute throughput (FLOPs) unused. HBM delivers data to the GPU at terabytes per second (3.352 TB/s for the H100 shown in Figure 1), which keeps the CUDA and Tensor Cores busy and allows training to scale efficiently to very large models.
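One standard way to reason about these stalls is the roofline model: a kernel's attainable throughput is the smaller of the GPU's peak compute rate and its arithmetic intensity (FLOPs per byte moved from memory) times memory bandwidth. The sketch below uses the 3.352 TB/s figure from Figure 1; the peak compute value of 989 TFLOP/s is an assumption (roughly the H100 SXM dense BF16 Tensor Core rate) and should be replaced with the number for your GPU and data type. The helper names are hypothetical.

```python
# Roofline model: attainable FLOP/s = min(peak compute, intensity * bandwidth).
PEAK_FLOPS = 989e12        # assumed dense BF16 peak (FLOP/s); replace for your GPU
HBM_BANDWIDTH = 3.352e12   # bytes/s, the H100 figure from Figure 1


def attainable_flops(flops: float, bytes_moved: float) -> float:
    """Upper bound on FLOP/s for a kernel, per the roofline model."""
    intensity = flops / bytes_moved  # FLOPs per byte of memory traffic
    return min(PEAK_FLOPS, intensity * HBM_BANDWIDTH)


def gemv_cost(n: int, bytes_per_elem: int = 2) -> tuple[int, int]:
    """FLOPs and bytes for y = A @ x with an n x n matrix (BF16 by default)."""
    flops = 2 * n * n                                # one multiply + one add per element of A
    bytes_moved = bytes_per_elem * (n * n + 2 * n)   # read A and x, write y
    return flops, bytes_moved


if __name__ == "__main__":
    ridge = PEAK_FLOPS / HBM_BANDWIDTH
    print(f"Ridge point: {ridge:.0f} FLOPs/byte")
    f, b = gemv_cost(8192)
    util = attainable_flops(f, b) / PEAK_FLOPS
    print(f"8192x8192 GEMV: at most {util:.1%} of peak compute")
```

A matrix-vector product performs only about one FLOP per byte, far below the ridge point of roughly 295 FLOPs per byte under these assumptions, so it can use well under 1% of peak compute: memory bandwidth, not FLOPs, sets its speed.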

Technical Background: How HBM Works

- 3D stacked DRAM: HBM consists of multiple DRAM dies stacked vertically and connected by through-silicon vias (TSVs).

- Silicon interposer: The stack sits next to the GPU die and connects through an interposer with thousands of fine-pitch wires.

- Wide bus, lower frequency: HBM achieves its bandwidth through an extremely wide memory interface (1,024 bits per stack, so thousands of bits across several stacks) rather than very high clock speeds, which makes it both fast and power-efficient.

- Capacity per stack: Each stack holds a limited amount of memory (16 GB per stack on the H100); GPUs integrate multiple stacks for their total memory capacity (e.g., five active stacks give the NVIDIA H100 its 80 GB).
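The wide-bus trade-off comes down to simple arithmetic: peak bandwidth equals interface width in bits times the per-pin data rate, divided by 8 to convert bits to bytes. This sketch assumes a 1,024-bit interface per stack and, for the first example, the 6.4 Gb/s per-pin maximum of the JEDEC HBM3 standard; the second example works backward from the H100's 3,350 GB/s and its five active stacks (a 5,120-bit bus) to the per-pin rate that bandwidth implies. The function names are hypothetical.

```python
# Peak bandwidth (GB/s) = interface width (bits) * per-pin rate (Gb/s) / 8 bits per byte.
BITS_PER_STACK = 1024  # HBM interface width per stack


def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s for a memory interface."""
    return bus_width_bits * gbps_per_pin / 8


def implied_pin_rate(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gb/s) needed to reach a given bandwidth."""
    return bandwidth_gbs * 8 / bus_width_bits


if __name__ == "__main__":
    # One HBM3 stack at the assumed 6.4 Gb/s per-pin maximum.
    print(f"One stack: {peak_bandwidth_gbs(BITS_PER_STACK, 6.4):.1f} GB/s")
    # H100: five active stacks give a 5,120-bit bus; rate implied by 3,350 GB/s.
    h100_bus = 5 * BITS_PER_STACK
    print(f"H100 implied pin rate: {implied_pin_rate(3350, h100_bus):.2f} Gb/s")
```

The implied rate of about 5.2 Gb/s per pin is modest compared with GDDR memory, which runs its pins considerably faster; HBM reaches its total bandwidth through width rather than clock speed.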

Performance Metrics

When looking at GPU specifications, two numbers describe HBM performance:

- Capacity (GB): How much model data can fit directly into GPU memory. Larger capacity reduces the need for offloading to CPU memory or splitting across multiple GPUs.

- Bandwidth (GB/s): How quickly data can be moved between HBM and the GPU cores. For example, the NVIDIA H100 provides about 3,350 GB/s, compared with roughly 100 GB/s for a desktop CPU with dual-channel DDR5.
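One way to compare these two bandwidth figures is to ask how long it takes simply to read every byte of an 80 GB memory once, as a memory-bound pass over all of a model's weights might. This is an idealized lower bound that assumes the full peak bandwidth is sustained; real kernels achieve somewhat less. The function name is illustrative.

```python
# Idealized time to read a given amount of data once at peak bandwidth.

def read_time_ms(data_gb: float, bandwidth_gbs: float) -> float:
    """Lower-bound time in milliseconds to stream data_gb at bandwidth_gbs."""
    return data_gb / bandwidth_gbs * 1000


if __name__ == "__main__":
    data_gb = 80  # e.g., a full H100's worth of HBM
    for name, bw in [("H100 HBM", 3350), ("DDR5 CPU", 100)]:
        print(f"{name}: {read_time_ms(data_gb, bw):.1f} ms to read {data_gb} GB")
```

Under these assumptions the HBM pass takes about 24 ms versus 800 ms on the DDR5 system, a gap of more than 30x that recurs on every pass over the data.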

Real-World Implications for ML

- Larger models: More HBM capacity allows larger models (or larger batch sizes) to fit on a single GPU.

- Faster training: Higher bandwidth keeps the matrix multiplication units supplied with data instead of leaving them idle.

- Power efficiency: HBM consumes less energy per bit transferred than DDR or GDDR, helping GPUs stay within data center power budgets.
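The link between batch size, capacity, and idle matrix units can be made concrete by computing the arithmetic intensity (FLOPs per byte of memory traffic) of a single linear layer. This sketch assumes BF16 storage (2 bytes per element), a square d x d weight matrix, and that weights, inputs, and outputs each cross the HBM interface exactly once; the layer width of 8,192 is an arbitrary illustrative value. Higher intensity means each byte fetched from HBM supports more computation.

```python
# Arithmetic intensity of Y = X @ W for a batch of B vectors through a d x d layer.
BYTES_PER_ELEM = 2  # BF16 (assumed)


def linear_intensity(batch: int, d: int) -> float:
    """FLOPs per byte, assuming weights and inputs are read once and outputs written once."""
    flops = 2 * batch * d * d                               # multiply-add per weight per sample
    bytes_moved = BYTES_PER_ELEM * (d * d + 2 * batch * d)  # W, X, and Y
    return flops / bytes_moved


if __name__ == "__main__":
    d = 8192  # illustrative layer width
    for batch in (1, 16, 256, 4096):
        print(f"batch {batch:5d}: {linear_intensity(batch, d):7.1f} FLOPs/byte")
```

At batch size 1 the layer performs about one FLOP per byte and is bound by bandwidth; as the batch grows, the cost of reading the weights is amortized and intensity rises roughly in proportion to the batch. High bandwidth and enough capacity for large batches therefore work together to keep the cores busy.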

Key Takeaways

- HBM is stacked memory integrated with the GPU package via a silicon interposer.

- It provides terabytes per second of bandwidth, far beyond that of DDR or GDDR.

- For ML, both capacity (fitting large models) and bandwidth (keeping cores fed) are critical.

- Modern data center GPUs (A100, H100, MI250) rely on HBM to make large-scale training feasible.